The xAI PromptIDE is an integrated development environment for prompt engineering and interpretability research. It accelerates prompt engineering through an SDK that allows implementing complex prompting techniques and rich analytics that visualize the network's outputs. We use it heavily in our continuous development of Grok™.

We developed the PromptIDE to give transparent access to Grok-1, the model that powers Grok™, to engineers and researchers in the community. The IDE is designed to empower users and help them explore the capabilities of our large language models (LLMs) at pace. At the heart of the IDE is a Python code editor that - combined with a new SDK - allows implementing complex prompting techniques. While executing prompts in the IDE, users see helpful analytics such as the precise tokenization, sampling probabilities, alternative tokens, and aggregated attention masks.

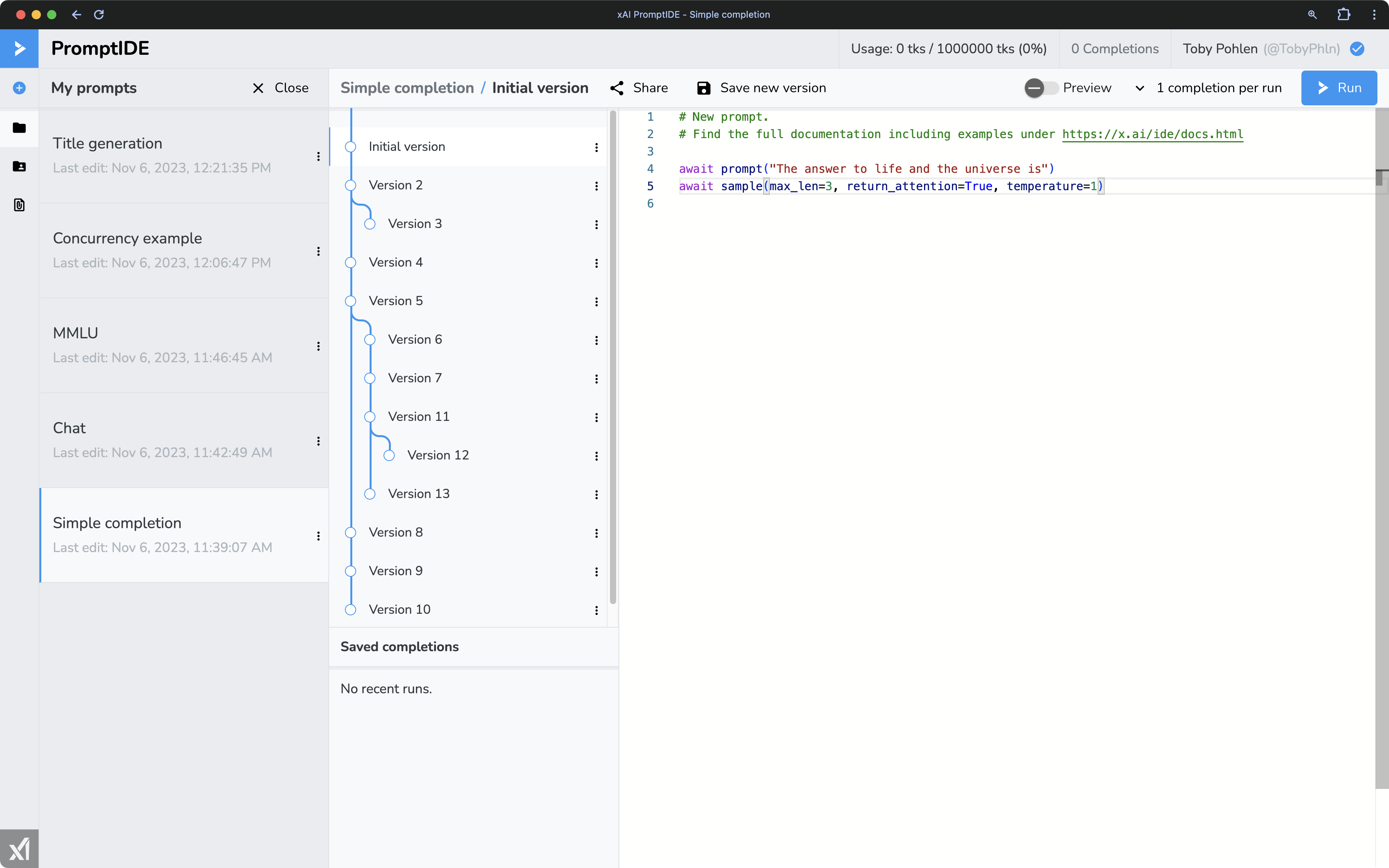

The IDE also offers quality of life features. It automatically saves all prompts and has built-in versioning. The analytics generated by running a prompt can be stored permanently allowing users to compare the outputs of different prompting techniques. Finally, users can upload small files such as CSV files and read them using a single Python function from the SDK. When combined with the SDK's concurrency features, even somewhat large files can be processed quickly.

We also hope to build a community around the PromptIDE. Any prompt can be shared publicly at the click of a button. Users can decide if they only want to share a single version of the prompt or the entire tree. It's also possible to include any stored analytics when sharing a prompt.

The PromptIDE is available to members of our early access program. Below, you find a walkthrough of the main features of the IDE.

Thank you,

the xAI Team

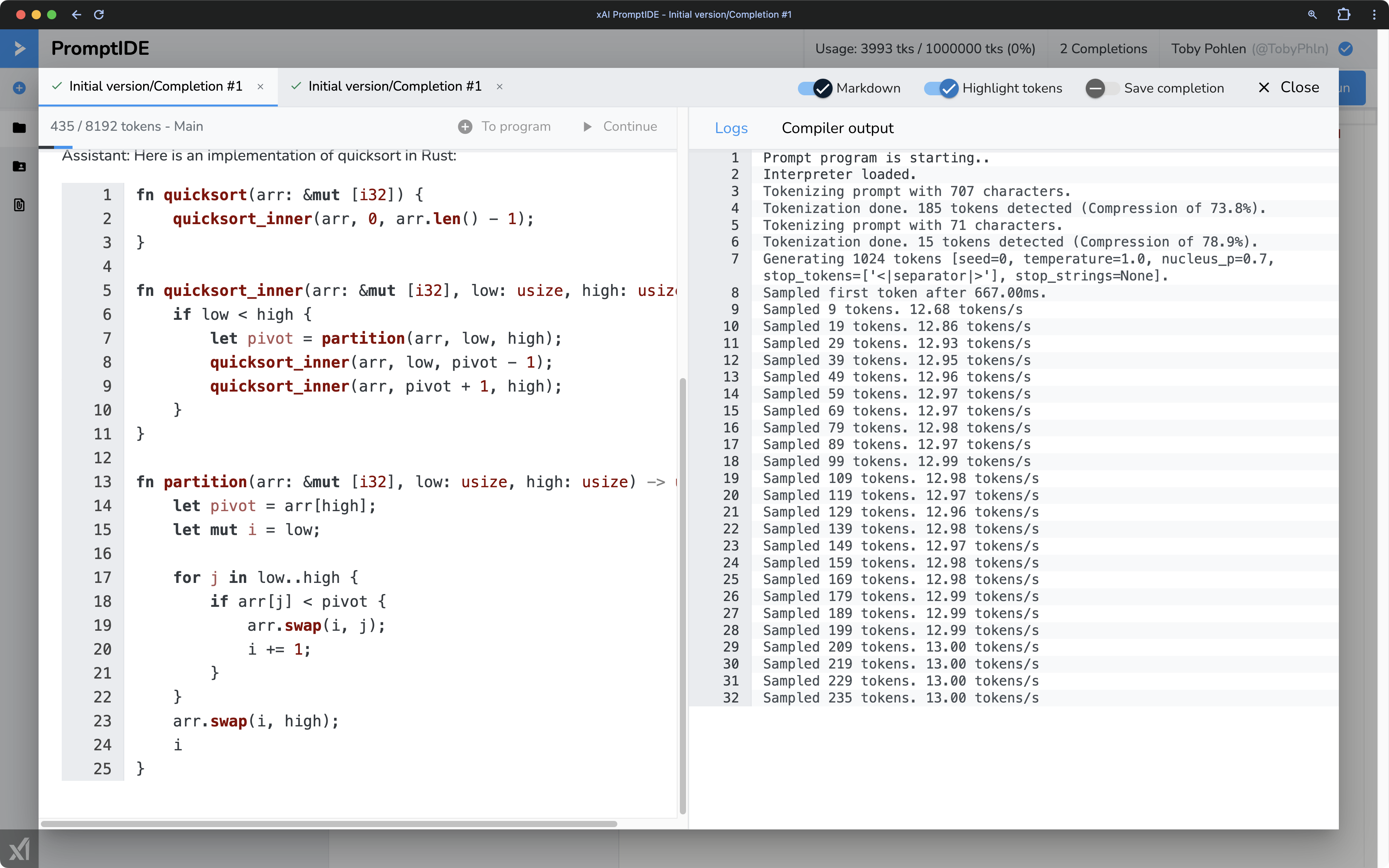

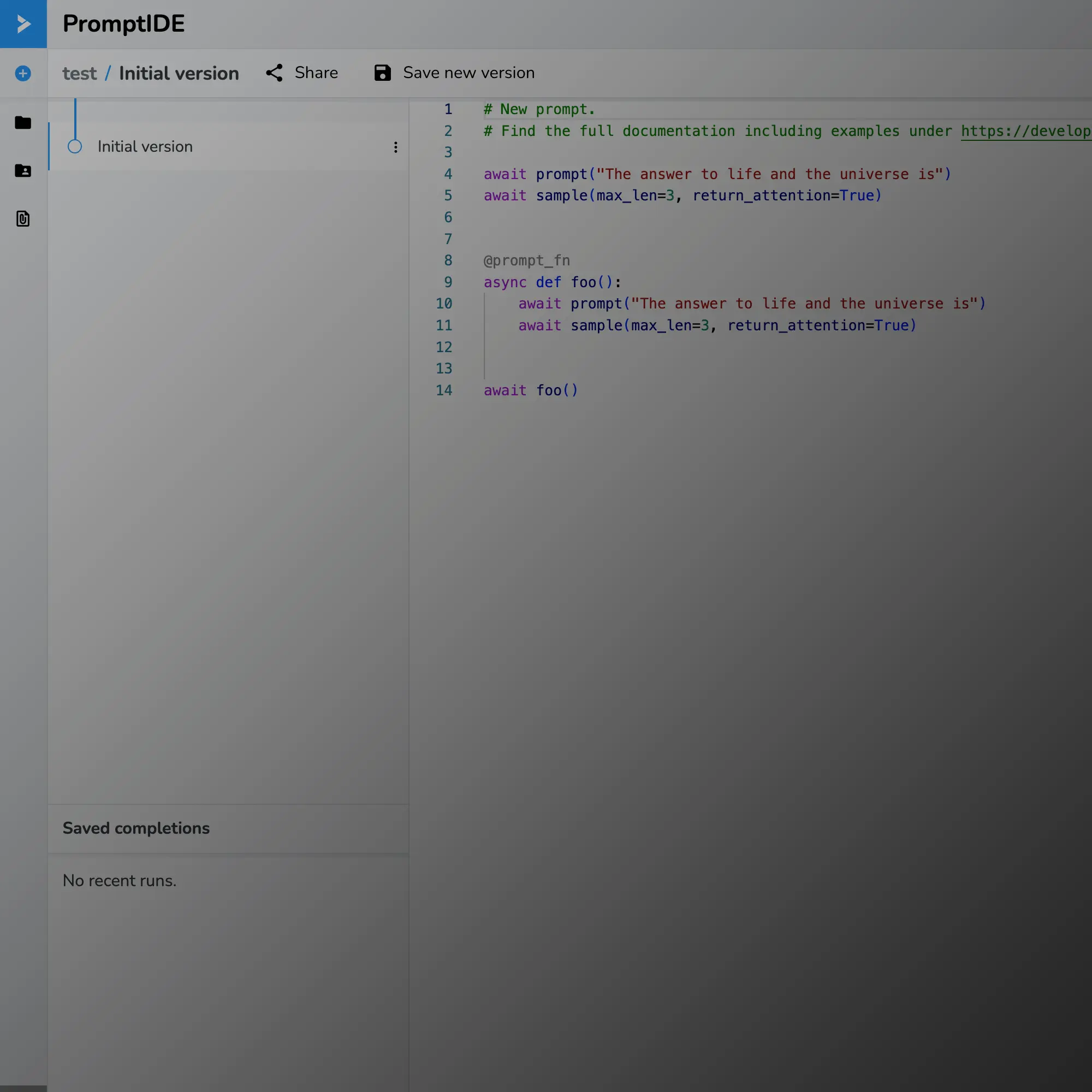

Code editor & SDK

At the heart of the PromptIDE is a code editor and a Python SDK. The SDK provides a new programming paradigm that

allows implementing complex prompting techniques elegantly. All Python functions are executed in an implicit context,

which is a sequence of tokens. You can manually add tokens to the context using the prompt() function or you can

use our models to generate tokens based on the context using the sample() function.

Code is executed locally using an in-browser Python interpreter that runs in a separate web worker. Multiple web workers can run at the same time, which means you can execute many prompts in parallel.

Complex prompting techniques can be implemented using multiple contexts within the same program. If a function

is annotated with the @prompt_fn decorator, it is executed in its own, fresh context. The function can perform

some operations independently of its parent context and pass the results back to the caller using the return

statement. This programming paradigm enables recursive and iterative prompts with arbitrarily nested sub-contexts.

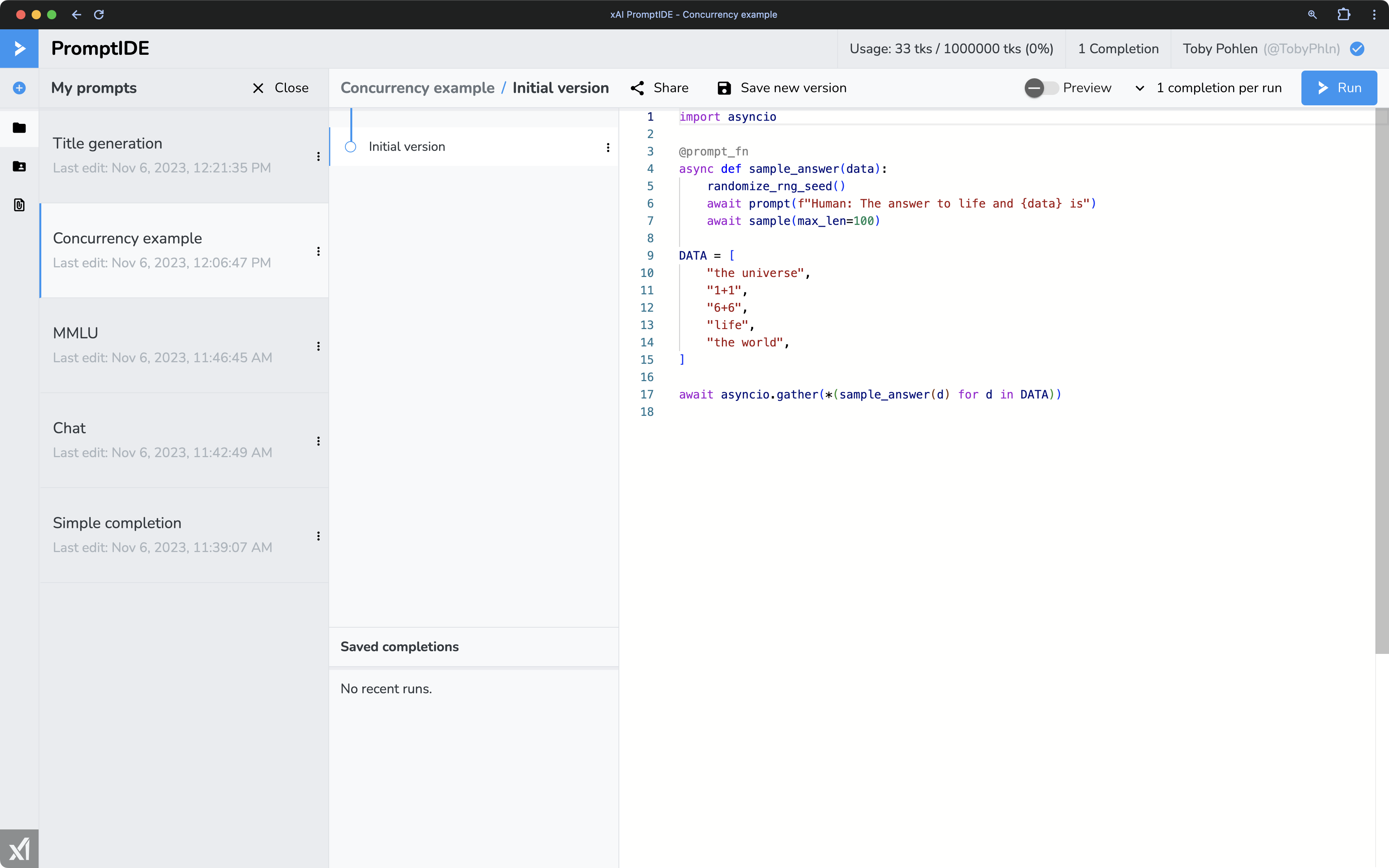

Concurrency

The SDK uses Python coroutines that enable processing multiple @prompt_fn-annotated Python

functions concurrently. This can significantly speed up the time to completion - especially when working with

CSV files.

User inputs

Prompts can be made interactive through the user_input() function, which blocks execution until the user has

entered a string into a textbox in the UI. The user_input() function returns the string entered by the user,

which cen then, for example, be added to the context via the prompt() function. Using these APIs, a chatbot

can be implemented in just four lines of code:

await prompt(PREAMBLE)

while text := await user_input("Write a message"):

await prompt(f"<|separator|>\n\nHuman: {text}<|separator|>\n\nAssistant:")

await sample(max_len=1024, stop_tokens=["<|separator|>"], return_attention=True)

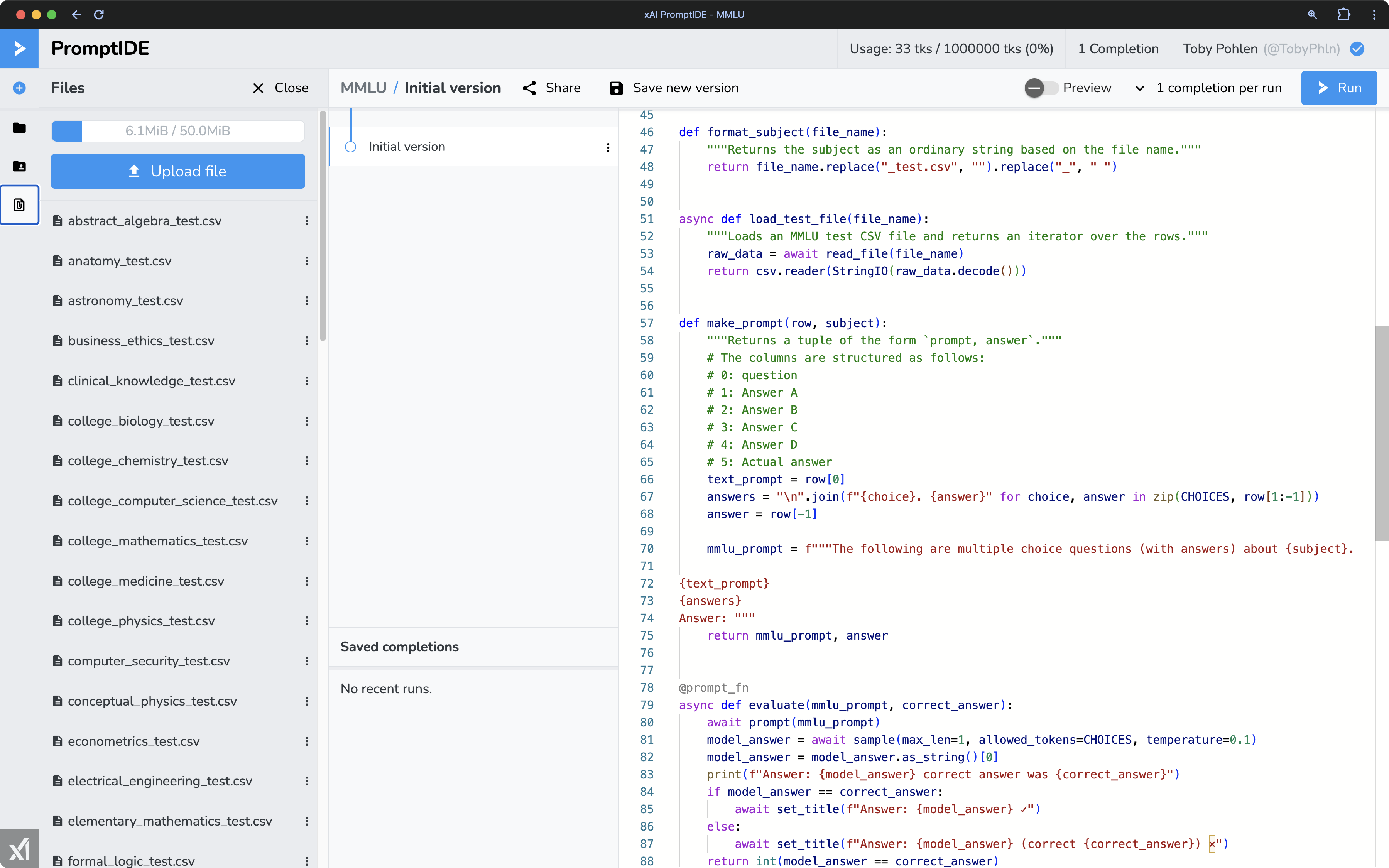

Files

Developers can upload small files to the PromptIDE (up to 5 MiB per file. At most 50 MiB total) and use their

uploaded files in the prompt. The read_file() function returns any uploaded file as a byte array. When combined

with the concurrency feature mentioned above, this can be used to implement batch processing prompts to evaluate

a prompting technique on a variety of problems. The screenshot below shows a prompt that calculates the MMLU

evaluation score.

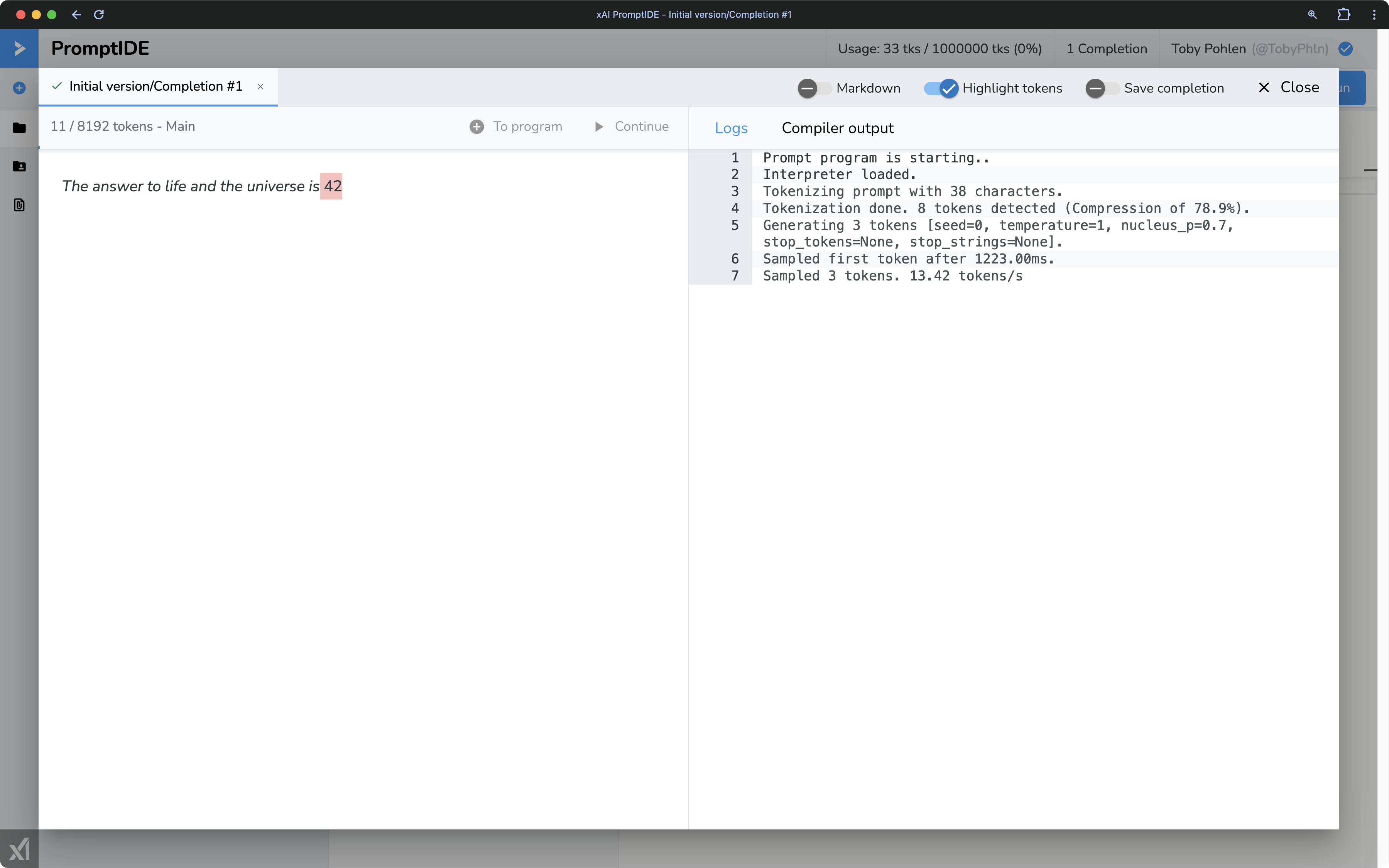

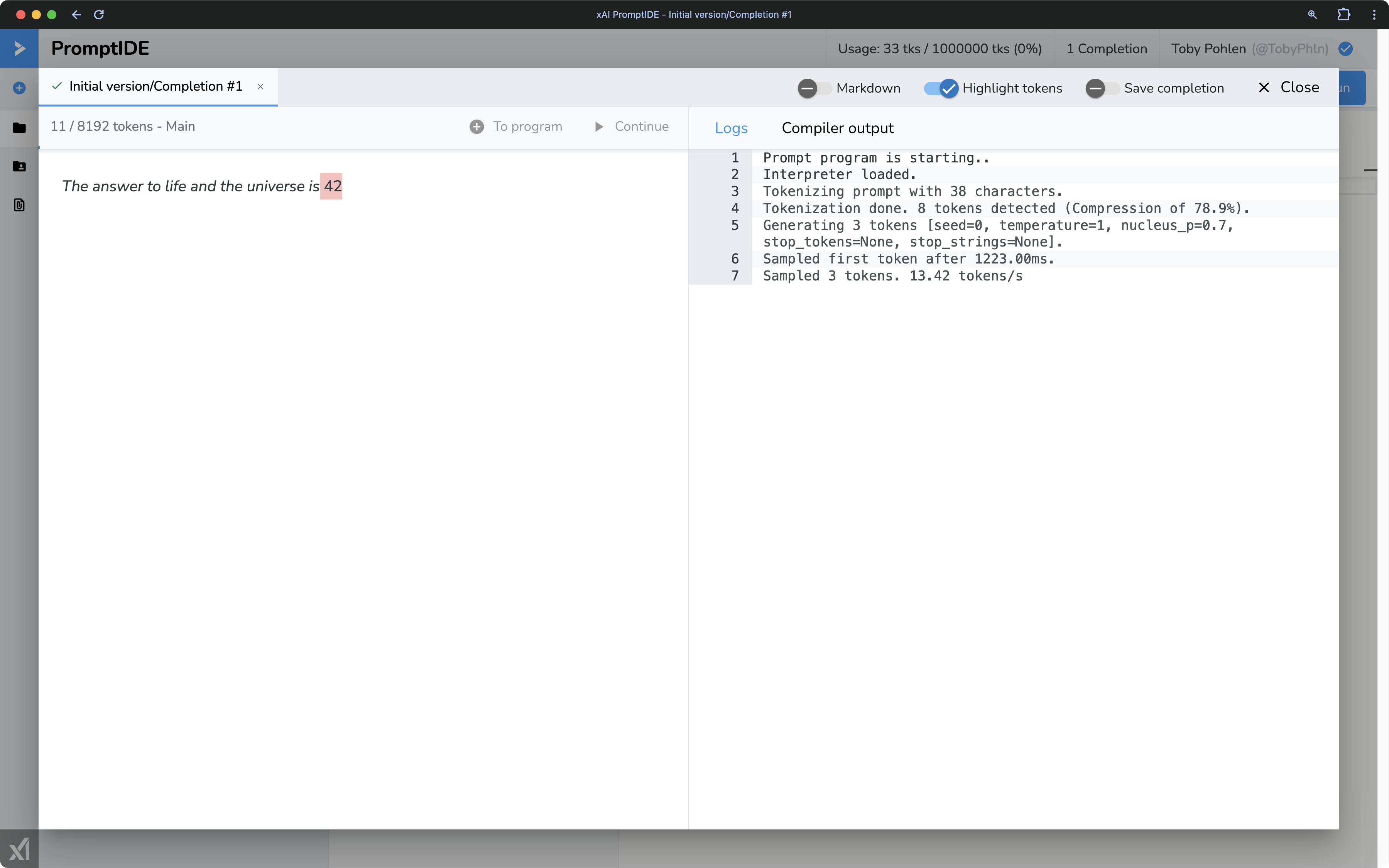

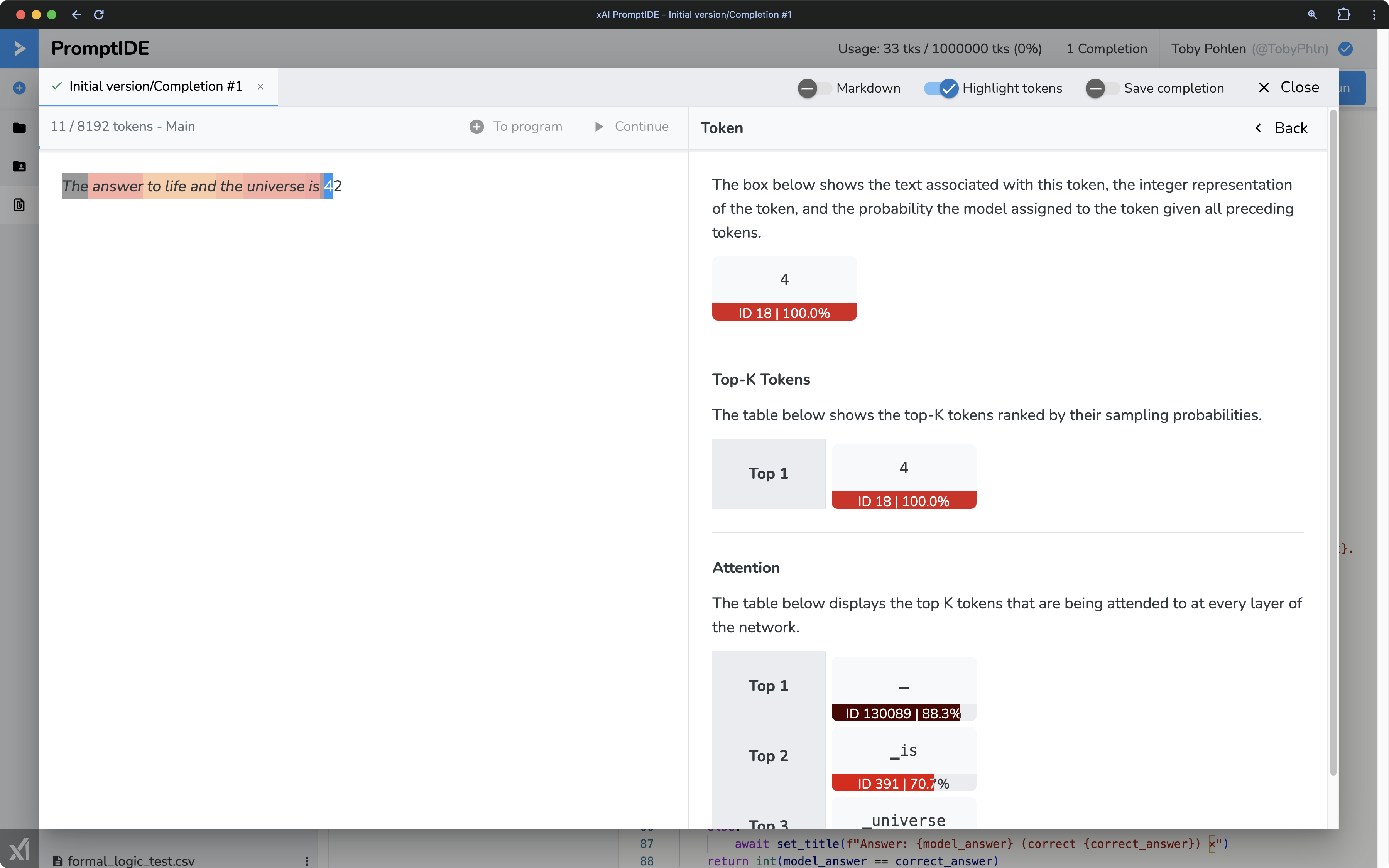

Analytics

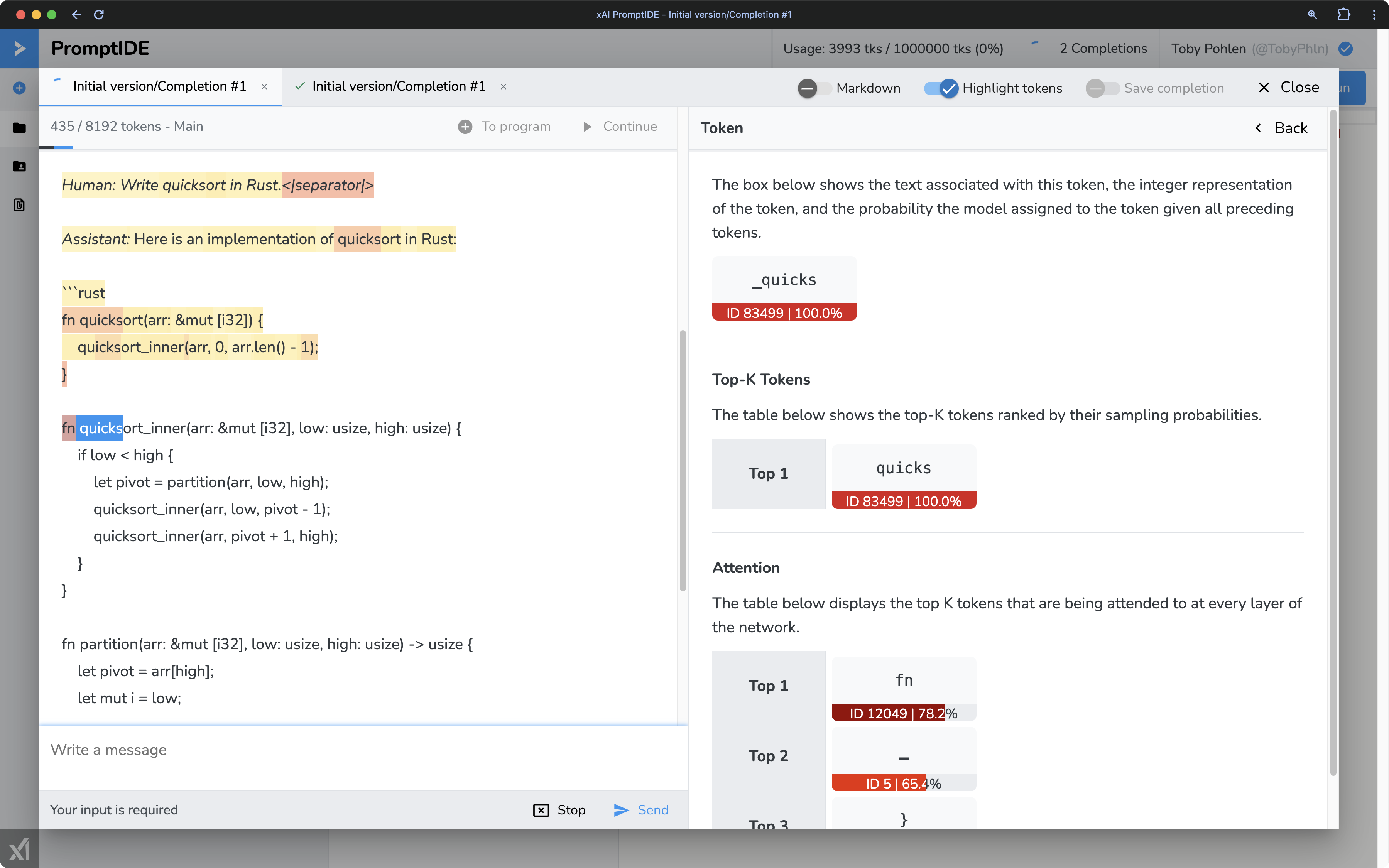

While executing a prompt, users see detailed per-token analytics to help them better understand the model's output. The completion window shows the precise tokenization of the context alongside the numeric identifiers of each token. When clicking on a token, users also see the top-K tokens after applying top-P thresholding and the aggregated attention mask at the token.

When using the user_input() function, a textbox shows up in the window while the prompt is running that users can enter

their response into. The below screenshot shows the result of executing the chatbot code snippet listed above.

Finally, the context can also be rendered in markdown to improve legibility when the token visualization features are not required.